Difference between revisions of "X1011"


Revision as of 17:37, 1 November 2024


Tips

The X1011 does not currently support every RAID type; RAID 1 and ZFS, for example, are not supported. This may be due to driver compatibility issues, which have not yet been confirmed. The X1011 presents four M.2 SSDs to the OS as separate drives. It uses the ASM1184e PCI Express packet switch, which connects one PCIe x1 Gen2 upstream port to four PCIe x1 Gen2 downstream ports, extending the PCIe ports available on a Raspberry Pi 5.

To verify whether it is a hardware problem:

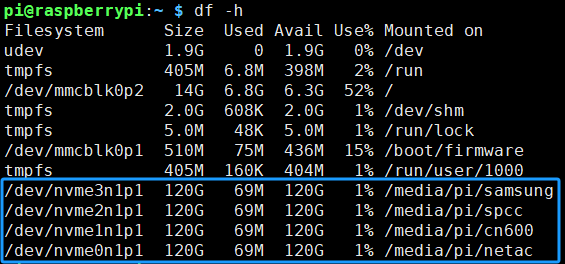

1. Clear any RAID settings and mount each drive as a separate volume.

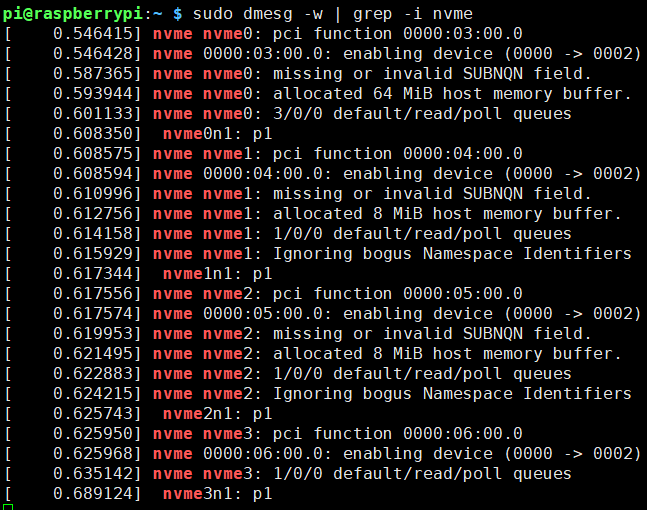

2. Open a second terminal to monitor for NVMe errors (I/O timeout, controller reset, I/O error, etc.):

pi@raspberrypi ~ $ sudo dmesg -w | grep -i nvme

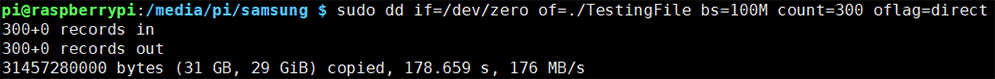

3. Create a 30GB test file on one of the SSDs (run the command from a directory on that SSD):

pi@raspberrypi ~ $ sudo dd if=/dev/zero of=./TestingFile bs=100M count=300 oflag=direct

4. Copy the 30GB file to multiple SSDs simultaneously (the -P 3 option makes xargs run the three copies in parallel rather than one after another):

pi@raspberrypi ~ $ echo /media/pi/cn600/ /media/pi/spcc/ /media/pi/netac/ | xargs -n 1 -P 3 cp ./TestingFile
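Once the copies in step 4 finish, comparing checksums is one way to tell whether any data was corrupted along the way. The following is a minimal sketch and not part of the original procedure; the function name verify_copies is hypothetical, and the mount points in the usage line are the ones from step 4.

```shell
#!/bin/sh
# verify_copies SRC DIR...
# Compare the SHA-256 of SRC with the same-named copy inside each DIR.
# Prints one line per directory and returns non-zero if any copy differs
# or is missing.
verify_copies() {
    src=$1
    shift
    name=$(basename "$src")
    want=$(sha256sum "$src" | cut -d ' ' -f 1)
    status=0
    for dir in "$@"; do
        # A missing or unreadable copy yields an empty hash and is a mismatch.
        got=$(sha256sum "$dir/$name" 2>/dev/null | cut -d ' ' -f 1)
        if [ "$got" = "$want" ]; then
            echo "OK       $dir"
        else
            echo "MISMATCH $dir"
            status=1
        fi
    done
    return "$status"
}

# Example with the mount points from step 4:
# verify_copies ./TestingFile /media/pi/cn600 /media/pi/spcc /media/pi/netac
```

A mismatch that coincides with NVMe errors in the second terminal would point toward a hardware or link problem rather than a RAID or filesystem configuration issue.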

Overview

Enhance your Raspberry Pi 5 with effortless installation and lightning-fast PCIe storage speeds!

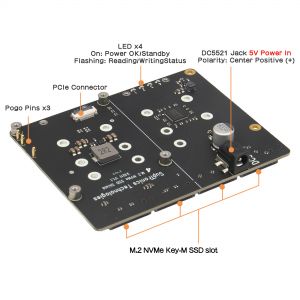

The X1011 is a four-slot M.2 NVMe SSD shield designed to provide high-capacity, high-speed storage for your Raspberry Pi 5. Its compact design makes it easy to attach four full-size M.2 2280 SSDs to your Raspberry Pi 5. Its PCIe 2.0 interface supports data transfer rates of up to 5Gbps, so large amounts of data can be transferred quickly.

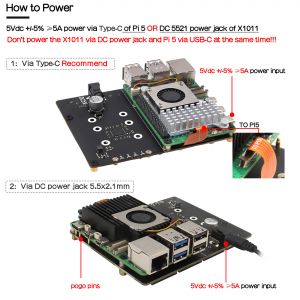

The X1011 connects to the underside of the Raspberry Pi 5, eliminating the need for a passthrough for the GPIO. This means you can use your favorite HATs while also using this expansion board. The X1011 also offers two power options: it can draw power from the Raspberry Pi 5 through pogo pins when the Pi is powered by a USB-C power supply, or it can power the Raspberry Pi 5 from a DC power adapter via the onboard DC power jack. Either way, a single power source is enough.

The X1011 is an ideal storage solution for creating a home media center or building a network-attached storage (NAS) system. It allows you to store and stream your own videos, music, and digital photos within your home or even remotely across the world.

Geekworm PCIe to NVME Sets:

After the Raspberry Pi AI Kit launched, we tested four PCIe peripheral boards (PIPs): X1001, X1004, X1011 and M901. Of these, only the X1011 does not support the Hailo-8 AI accelerator.

Note that the X1004 uses the ASMedia ASM1182e PCIe switch and the X1011 uses the ASM1184e. Neither switch supports PCIe Gen 3 speeds, so even if the PCIe Gen 3.0 setting is forced on in the Raspberry Pi 5, the link is limited by the switch and still runs at PCIe Gen 2.0 speed (5Gbps). For the Hailo-8 AI accelerator, the Raspberry Pi Foundation highly recommends using PCIe 3.0 to achieve the best performance with the AI Kit.
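To put the Gen 2 limit in numbers: a PCIe 2.0 lane signals at 5 GT/s with 8b/10b encoding, while a PCIe 3.0 lane signals at 8 GT/s with 128b/130b encoding. The sketch below works out the approximate payload bandwidth per lane before protocol overhead; the function name lane_mbytes is illustrative only, and real-world throughput will be lower.

```shell
#!/bin/sh
# lane_mbytes RATE_MT ENC_NUM ENC_DEN
# Approximate payload bandwidth of one PCIe lane in MB/s (integer math),
# given the signalling rate in MT/s and the line encoding ratio.
lane_mbytes() {
    echo $(( $1 * $2 / $3 / 8 ))
}

gen2=$(lane_mbytes 5000 8 10)      # PCIe 2.0: 5 GT/s, 8b/10b encoding
gen3=$(lane_mbytes 8000 128 130)   # PCIe 3.0: 8 GT/s, 128b/130b encoding

echo "PCIe 2.0 x1: about ${gen2} MB/s"
echo "PCIe 3.0 x1: about ${gen3} MB/s"
# On the X1011, the four downstream ports share one x1 Gen2 upstream link.
echo "Per SSD with all four busy: about $(( gen2 / 4 )) MB/s"
```

Because every SSD sits behind the same x1 Gen2 upstream port, the combined throughput of all four drives is bounded by that single link.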

Our tentative conclusions are as follows:

- If you need high performance from the Hailo-8 AI accelerator, we recommend the X1015, X1002, X1003, M901, the official M.2 HAT+, or similar. When choosing among these PIP boards, check whether the camera cable conflicts with the PIP board installation, and enable PCIe 3.0 for the Hailo-8 AI accelerator. You will also need an SD card as the system disk.

- If you do not need the extra performance of PCIe 3.0, consider the X1004: you can install an NVMe SSD in either socket as the system disk and a Hailo-8 AI accelerator in the other, and so have both.

*Caution: The Matching Case section only indicates compatibility between the case, PCIe Peripheral Board and Raspberry Pi 5 Board, and excludes products in the Product Matching Reference section. Compatibility of these referenced products with the case is subject to their actual specifications.

| Model | Compatible with | Position | NVMe M2 SSD Length Support | Matching Case | Matching Cooler | Support NVMe Boot | Support PCIe 3.0 | Support Hailo-8 AI Accelerator | Product Matching Reference |
|---|---|---|---|---|---|---|---|---|---|
| X1000 | Raspberry Pi 5 | Top | 2230/2242 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Not tested | |
| X1001 | Raspberry Pi 5 | Top | 2230/2242/2260/2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Yes | |
| X1002 | Raspberry Pi 5 | Bottom | 2230/2242/2260/2280 | P580 / P580-V2 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | NO | |
| X1003 | Raspberry Pi 5 | Top | 2230/2242 | P579 / P425 | Official Cooler / H501 / H510 Only | Yes | - | Not tested | |
| X1004 | Raspberry Pi 5 | Top | Dual ssd: 2280 | P579-V2 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes (Requires EEPROM 2024/05/17 and later version) | NO | Yes | |
| X1005 | Raspberry Pi 5 | Bottom | Dual ssd: 2230/2242/2260/2280 | P580-V2 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes (Requires EEPROM 2024/05/17 and later version) | NO | Yes | |
| X1011 | Raspberry Pi 5 | Bottom | 4 ssds: 2230/2242/2260/2280 | X1011-C1 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes (eeprom 2024/05/17 and later version) | NO | NO | |
| X1012 | Raspberry Pi 5 | Top | 2230/2242/2260/2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Not tested | |
| X1015 | Raspberry Pi 5 | Top | 2230/2242/2260/2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Yes | |
| M901 | Raspberry Pi 5 | Top | 2230/2242/2260/2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Yes | - |
| Q100 | Raspberry Pi 5 | Top | 2242 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Not tested | - |
| Q200 | Raspberry Pi 5 | Top | Dual ssd: 2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | NO | - | Not tested | - |
| M300 | Raspberry Pi 5 | Top | 2230/2242 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Not tested | - |
| M400 | Raspberry Pi 5 | Top | 2230/2242/2280 | P579 | Official Cooler / Argon THRML Cooler / H501 / H505 / H509 / H510 | Yes | - | Not tested | - |
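
Several rows in the table require a bootloader EEPROM dated 2024/05/17 or later for NVMe boot. The sketch below shows how that date comparison might be scripted. The helper eeprom_new_enough is hypothetical, and the commented usage lines assume that the first line of the vcgencmd bootloader_version output begins with a YYYY/MM/DD date, which you should confirm on your own system.

```shell
#!/bin/sh
# eeprom_new_enough DATE [MIN_DATE]
# DATE and MIN_DATE are YYYY/MM/DD strings; MIN_DATE defaults to 2024/05/17.
# Succeeds (exit 0) when DATE is on or after MIN_DATE.
eeprom_new_enough() {
    have=$(printf '%s' "$1" | tr -d '/')
    need=$(printf '%s' "${2:-2024/05/17}" | tr -d '/')
    # Reject empty or non-numeric input instead of letting the test error out.
    case $have in
        ''|*[!0-9]*) return 2 ;;
    esac
    [ "$have" -ge "$need" ]
}

# Example (assumption: the first field of the first output line is the date):
# current=$(vcgencmd bootloader_version | head -n 1 | cut -d ' ' -f 1)
# eeprom_new_enough "$current" && echo "Bootloader meets the 2024/05/17 requirement"
```

If the reported date is older, running sudo rpi-eeprom-update -a and rebooting is the usual way to update the bootloader on Raspberry Pi OS.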

Features

| For use with |

Raspberry Pi 5 Model B |

| Key Features |

Note: The X1011 hardware imposes no limit on NVMe SSD capacity; any limit depends on Raspberry Pi OS. |

| Ports & Connectors |

|

| How to Power |

Don't power the X1011 via the DC power jack and the Raspberry Pi 5 via USB-C at the same time. |

| Important Notes |

|

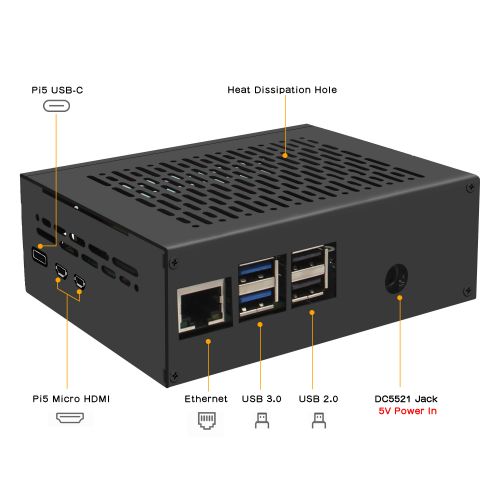

Matching Case

This is the X1011-C1 metal case, designed only for the Raspberry Pi 5 with the X1011 PCIe to NVMe shield.

Note: Because the case is metal, it shields the WiFi signal; please use wired Ethernet.

Packing List of X1011-C1:

- 1 x Metal Case

- 2 x M2.5*6+3 Female/Male Spacer

- 2 x M2.5*6 Female/Female Spacer

- 4 x KM2.5*4 Screw

- 4 x pads with a diameter of 8 mm

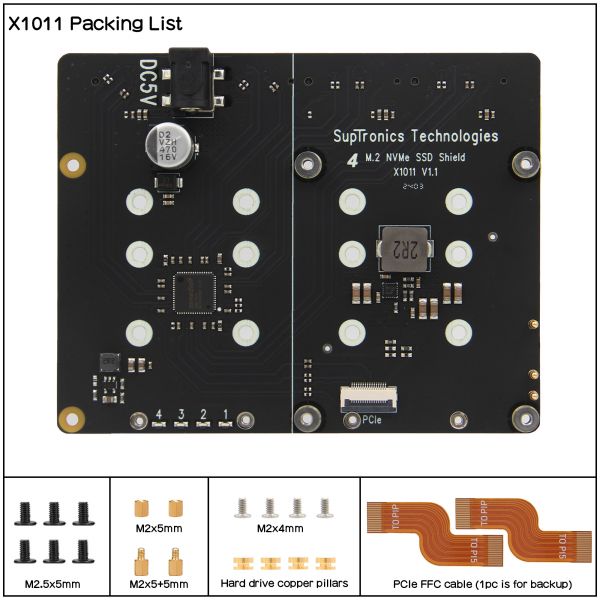

Packing List

- 1 x X1011 V1.1 M.2 NVMe 4 SSD shield

- 2 x 37mm PCIe FFC cable (one is a spare)

- 8 x M2.5*5 Screws

- 2 x M2.5*5 Female/Female Spacer

- 2 x M2.5*5+5 Male/Female Spacer

- 4 x M2*4 Screws

- 4 x M2 Copper Nut

User Manual

- Dimensions source file (DXF): File:X1011-pcb.dxf - You can view it with Autodesk Viewer online

- Hardware: X1011-hardware

- Software: How to make X1011 work: Software Tutorials

- From Jeff's review video: https://www.youtube.com/watch?v=yLZET7Jhza8

- Installation Video of X1011+X1011-C1: https://youtu.be/tw_KbqDN9rc

Related links

- 4-way NVMe RAID comes to Raspberry Pi 5

- Geekworm X1011 board adds up to four NVMe SSDs to the Raspberry Pi 5

- Add-on board lets you use four NVMe SSDs at once with Raspberry Pi 5

- Geekworm X1011 PCIe to Four M.2 NVMe SSD HAT

Test & Reviews

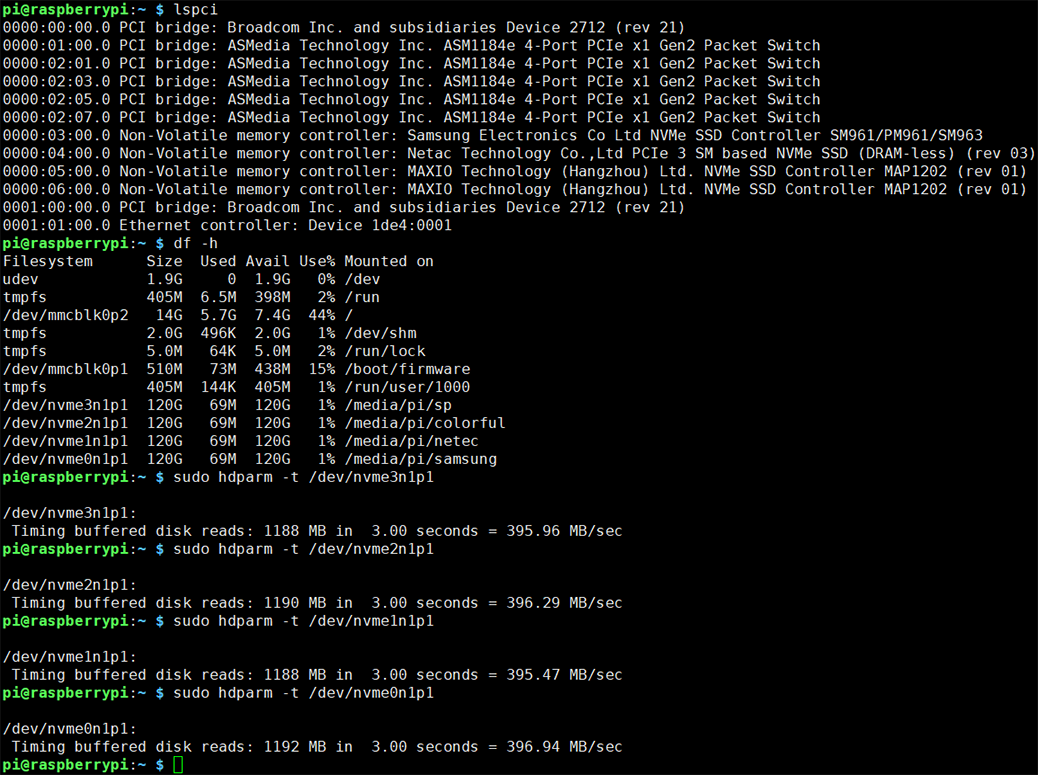

Test Conditions

- System board details: Raspberry Pi 5 Model B Rev 1.0, 4GB RAM

- Interface board details: X1011 v1.1 NVMe four SSD shield

- Operating system: Raspberry Pi OS with desktop (Debian 12 (bookworm), 64-bit, release date: December 5th 2023)

- Storage details: Colorful CN600 120G, Samsung PM961 120G, Netac N930E 120G, Silicon Power P34A60 120GB

Testing disk drives read speed at PCIe2.0 with hdparm
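
The hdparm read test named above can be reproduced roughly as follows. This is a sketch under assumptions: the device names depend on how the SSDs enumerate on your system, and the HDPARM variable and read_test function are hypothetical names introduced here. The -t option asks hdparm for a timed buffered read from the device.

```shell
#!/bin/sh
# Command used to invoke hdparm; point it at another command for a dry run.
HDPARM=${HDPARM:-sudo hdparm}

# read_test DEVICE...
# Run a timed buffered read (-t) on each device, one after another.
read_test() {
    for dev in "$@"; do
        # $HDPARM is left unquoted on purpose so "sudo hdparm" splits in two.
        $HDPARM -t "$dev"
    done
}

# Example, assuming the four SSDs enumerate as nvme0n1 to nvme3n1:
# read_test /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1
```

Running the tests one drive at a time keeps the results comparable, since all four drives share the same upstream PCIe link.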

FAQ

Q: Why is a PCIe 3.0 switch not used?

A: Cost. When the X1011 was designed and produced, a PCIe 3.0 switch IC cost close to 30 dollars in the Chinese market, six or more times the price of a PCIe 2.0 IC. (The price should be lower now; in addition, as a small-batch manufacturer we have no bargaining power when purchasing ICs.) We concluded that the resulting selling price would be too high for consumers to accept. Note also that the Raspberry Pi 5 is certified for PCIe 2.0 only, not PCIe 3.0.

Q: Why does the X1011 use a DC jack instead of Type-C?

A: We gave this a lot of thought. Type-C is limited to 5A. If four NVMe SSDs are being read and written at the same time, alongside the motherboard and a fan, would a Type-C power supply be enough at peak load? A DC jack can deliver more than 5A, so it provides an extra option.
